I’ve been making a game with my little sister. It’s a 2D pixel art bomberman-style game.

You can see we already have some basic fog of war to hide things the character can’t see.

She asked if we could implement nice 2D lighting. The gold standard of lighting in games is path tracing, but has anyone done that for pixel art? It turns out someone has, and it looks great:

However, I haven’t looked at their implementation, so I still count this as me doing research, just on a problem I know is solvable.

Path tracing is normally applied to 3D games, e.g. Cyberpunk 2077, and it is known for being a more accurate approach to rendering than what games traditionally do. However, I think it might be even more impactful for 2D pixel art games.

Pixel art games can suffer from a lack of dynamism in their scenes. In general, you’re mostly looking at the same thing every frame, with a few things shifted a bit as your character and other objects in the game move. But in the video above, you can see how the lighting effects they implemented add depth and atmosphere. Path tracing can give us realistic, soft shadows and beautiful indirect lighting that changes every frame as objects move around.

But path tracing has seen limited success in 3D games because it’s so slow. Will it be fast enough for 2D pixel art games? I think so.

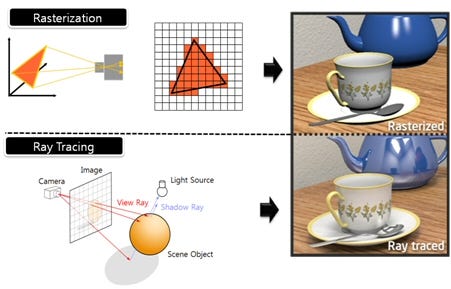

Rasterization vs Path Tracing

TL;DR:

Path Tracing: shooting rays of light out of the camera and then bouncing them around (finding a “path” to a light source). This lets you figure out what color to make each pixel.

Sample: One particular way of “bouncing” a ray of light off a surface.

Rasterization

Most games (including ours) render using “rasterization”: you take a triangle, figure out where it would be on the screen, and then draw pixels there. Repeat for each triangle. Then, add a post-processing step where you adjust the color and brightness of each pixel based on where the lights are in the scene.
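Here is a minimal CPU sketch of the “draw pixels where the triangle lands” step. It uses edge functions: a pixel is filled if its center is on the inside of all three edges. `rasterizeTriangle` and the flat `framebuffer` array are hypothetical, not our game’s code. GPUs do this in hardware and add fill rules so that pixels on an edge shared by two triangles aren’t drawn twice. The lighting post-process isn’t shown.

```typescript
type Vec2 = { x: number; y: number };

// Edge function: twice the signed area of triangle (a, b, p).
// Its sign says which side of the line a->b the point p lies on.
function edge(a: Vec2, b: Vec2, p: Vec2): number {
  return (b.x - a.x) * (p.y - a.y) - (b.y - a.y) * (p.x - a.x);
}

// Write `color` into every pixel whose center lies inside triangle (a, b, c).
function rasterizeTriangle(
  a: Vec2, b: Vec2, c: Vec2,
  color: number,
  framebuffer: number[], width: number, height: number,
): void {
  const area = edge(a, b, c);
  if (area === 0) return; // degenerate triangle covers no pixels

  // Only visit pixels inside the triangle's bounding box, clamped to the screen.
  const minX = Math.max(0, Math.floor(Math.min(a.x, b.x, c.x)));
  const maxX = Math.min(width - 1, Math.ceil(Math.max(a.x, b.x, c.x)));
  const minY = Math.max(0, Math.floor(Math.min(a.y, b.y, c.y)));
  const maxY = Math.min(height - 1, Math.ceil(Math.max(a.y, b.y, c.y)));

  for (let y = minY; y <= maxY; y++) {
    for (let x = minX; x <= maxX; x++) {
      const p = { x: x + 0.5, y: y + 0.5 }; // sample at the pixel center
      // Dividing by the signed area gives barycentric weights,
      // so the inside test works for either winding order.
      const w0 = edge(b, c, p) / area;
      const w1 = edge(c, a, p) / area;
      const w2 = edge(a, b, p) / area;
      if (w0 >= 0 && w1 >= 0 && w2 >= 0) framebuffer[y * width + x] = color;
    }
  }
}
```

For example, the triangle (0,0), (4,0), (0,4) on a 4×4 framebuffer fills the 10 pixels whose centers satisfy x + y ≤ 4.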

Path Tracing

According to this website about Greek mythology:

1. The ancient Greeks believed the eyes emitted a beam of invisible light--much like a lamp--which allowed one to see whatever it touched. Hence Theia, mother of sight (thea), was also the mother of the light-beaming sun, moon and dawn.

This is the approach used by path tracing. You shoot virtual rays of light out of each pixel of the “camera”, and then check what part of the scene those rays hit. When they hit something, you check the color of the thing they hit, and which lights can be seen from there. Then you color the pixel accordingly.

Technically, if you just do that, it’s only “ray tracing”. To make this “path tracing”, you want to go further. You can “bounce” the ray of light off whatever it hit, see where it ends up, and then check which lights can be seen from there. This gives you indirect illumination - light coming in through a window lights every crevice of your house, not just the parts of your house with a direct view of the sun through the window.

So: path tracing traces the entire light path from the camera to the light source, even when the light bounces off multiple objects to get there.

Each time you hit a surface though, you have to decide which way to bounce the light. Each way of bouncing the light could have a different effect on the color of the pixel. So you normally try a bunch, and then average the results to try to approximate what the color of that pixel actually should be. Each time you try a different bounce direction, that’s called one “sample”.
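Here is a minimal sketch of “try a bunch of bounces and average them”. The hypothetical `incomingLight` function stands in for tracing a ray into the scene. In this made-up scene, light only comes through a window that covers a quarter of all directions. A real path tracer would trace each ray, possibly bounce it again, and weight each sample by the surface’s material and the angle of incidence. This sketch leaves all of that out.

```typescript
// Hypothetical scene: light only arrives through a "window" covering angles [0, π/2).
// In a real path tracer this would trace a ray and return what it finds.
function incomingLight(theta: number): number {
  return theta >= 0 && theta < Math.PI / 2 ? 1 : 0;
}

// Monte Carlo estimate of the average light reaching a point:
// each sample picks one random bounce direction, and the pixel gets the mean.
function estimatePixel(samples: number, rand: () => number = Math.random): number {
  let total = 0;
  for (let i = 0; i < samples; i++) {
    const theta = 2 * Math.PI * rand(); // one random direction = one sample
    total += incomingLight(theta);
  }
  return total / samples;
}
```

The exact answer here is 0.25, because the window covers a quarter of the circle. With 4 samples, the estimate can only be 0, 0.25, 0.5, 0.75, or 1, so it jumps around from pixel to pixel. With thousands of samples, it settles near 0.25. That is the noise-versus-sample-count trade-off in miniature.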

Why it’s slow

Even games like Cyberpunk only use path tracing for lighting, and still use rasterization for most of the rendering pipeline. That’s because path tracing is generally far too slow to use for everything.

Tracing a path is fairly expensive, and has to be done for each sample for each pixel. Since your screen probably has millions of pixels, and you probably want several samples per pixel, you can see why we have a problem.
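To put rough numbers on it, here is a back-of-the-envelope ray budget. The inputs are illustrative assumptions: a 1080p screen, 4 samples per pixel, 2 bounces per sample at one ray per bounce, and 60 frames per second. Shadow rays are ignored. `raysPerSecond` is a hypothetical helper.

```typescript
// Rays traced per second, assuming one ray per bounce and no extra shadow rays.
function raysPerSecond(
  width: number, height: number,
  samplesPerPixel: number, bouncesPerSample: number, fps: number,
): number {
  return width * height * samplesPerPixel * bouncesPerSample * fps;
}

const budget = raysPerSecond(1920, 1080, 4, 2, 60);
```

That works out to 995,328,000, or roughly a billion rays per second, and each ray needs its own intersection tests.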

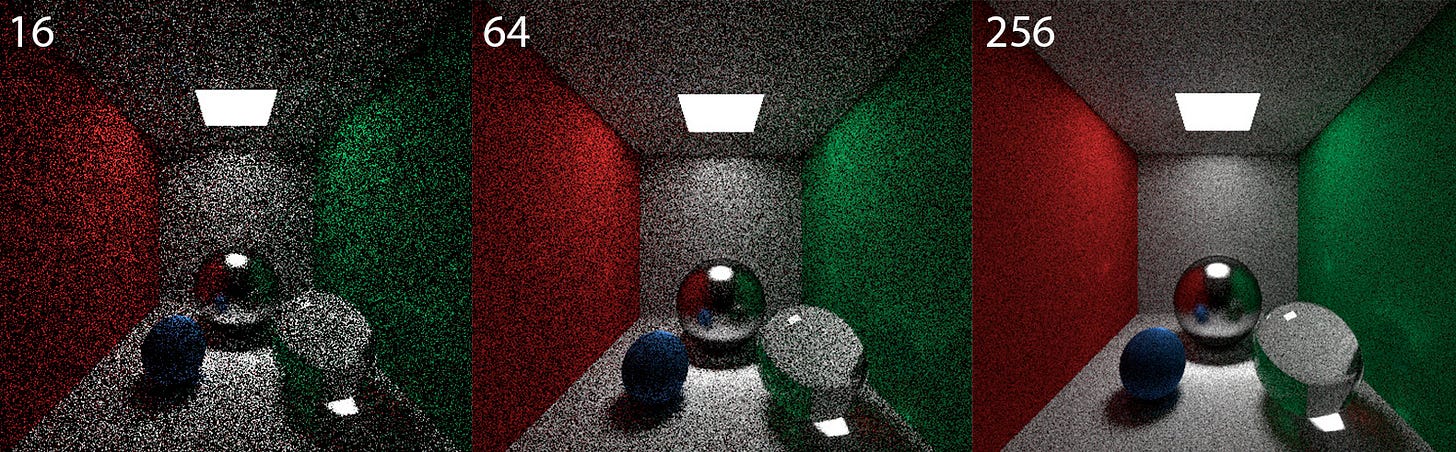

Here you can see the same scene rendered with different numbers of samples (light bounces) per pixel. 16 and 64 samples per pixel are unusably noisy, and even 256 samples per pixel is pretty bad.
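The standard Monte Carlo result explains why even 256 samples still looks noisy. Assume each sample $X_i$ is independent and has standard deviation $\sigma$. Then the average of $N$ samples has:

```latex
\hat{L}_N = \frac{1}{N}\sum_{i=1}^{N} X_i,
\qquad
\operatorname{StdDev}\!\left[\hat{L}_N\right] = \frac{\sigma}{\sqrt{N}}
```

So noise only falls with the square root of the sample count. Quadrupling from 64 to 256 samples merely halves it.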

If you thought 16 looked bad, you should know that games are lucky to have 3 or 4 samples per pixel. Often, you only get one sample per pixel.

They make it look okay by using various tricks that the program that produced the above image doesn’t implement. One trick is denoising - you basically “smooth out” the image after rendering it but before showing it on the screen.
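The crudest possible form of “smoothing out” is a box blur, which replaces each pixel with the average of its neighbors. This is a hypothetical sketch on a grayscale buffer. Real-time denoisers are much smarter. They typically avoid blurring across edges by using extra information such as depth and surface normals. The basic trade is the same, though: less noise in exchange for some blur.

```typescript
// Replace each pixel with the mean of the pixels within `radius` of it,
// counting only neighbors that fall inside the image.
function boxDenoise(image: Float32Array, width: number, height: number, radius = 1): Float32Array {
  const out = new Float32Array(width * height);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      let sum = 0;
      let count = 0;
      for (let dy = -radius; dy <= radius; dy++) {
        for (let dx = -radius; dx <= radius; dx++) {
          const nx = x + dx;
          const ny = y + dy;
          if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue; // off the edge
          sum += image[ny * width + nx];
          count++;
        }
      }
      out[y * width + x] = sum / count;
    }
  }
  return out;
}
```

A single bright “firefly” pixel of value 9 in a 3×3 image becomes 1 at the center and 2.25 in the corners. The energy is spread out instead of sitting in one noisy spike.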

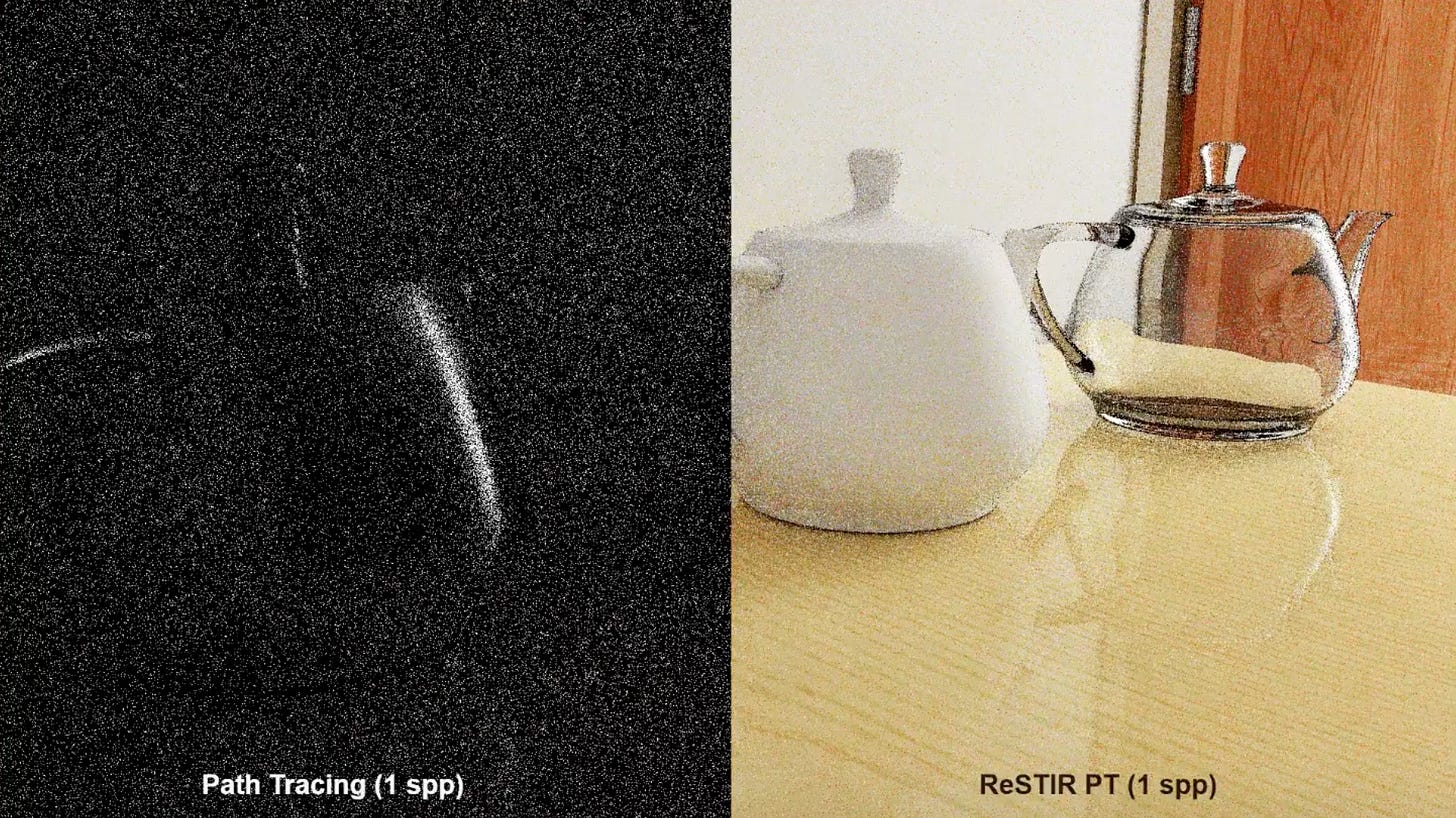

A more intelligent trick that I’ll talk more about later is called ReSTIR, and you can see it makes a big difference: both of the below images are at 1 sample per pixel. Doing path tracing at 1 sample per pixel normally looks horrible, but with ReSTIR it actually looks okay.

Read on to discover the secret sauce that makes this massive improvement possible.

Why it’s interesting for 2D pixel art games

I’m going to try to estimate how much faster path tracing might be for 2D pixel art games than for 3D games. I’ll give some numbers for the performance improvements, but they’re rough guesses, not measurements.

Fewer pixels

For a pixel art game, each “artistic pixel” will occupy many pixels of the actual screen. That’s what makes it pixel art, and what gives it the blocky, pixelated look.

You might end up with only 100,000 artistic pixels, rendered on a 1080p screen with roughly 2,000,000 actual pixels.

And it’s artistically reasonable that each “artistic pixel” would have a constant color, so instead of doing samples for each screen pixel, you can just do samples for each artistic pixel.

This means we immediately get a 20x speedup. Whatever work we were doing for each screen pixel before, we can now do for each “artistic pixel”.
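Here is a sketch of what “do the samples per artistic pixel” could look like, assuming an integer scale factor. The expensive lighting runs once per artistic pixel, and each result is then stretched over the block of screen pixels it covers. `renderPixelArt` and its `shade` callback are hypothetical, not our game’s code. For the numbers above, a 1080p screen has 1920 × 1080 = 2,073,600 pixels, and 2,073,600 / 100,000 ≈ 20.7, which is where the roughly 20x comes from.

```typescript
// Shade once per artistic pixel, then copy that color to the
// scale × scale block of screen pixels it covers (nearest-neighbor upscaling).
function renderPixelArt(
  artW: number, artH: number, scale: number,
  shade: (ax: number, ay: number) => number,
): { screen: Float32Array; shadeCalls: number } {
  const art = new Float32Array(artW * artH);
  let shadeCalls = 0;
  for (let ay = 0; ay < artH; ay++) {
    for (let ax = 0; ax < artW; ax++) {
      art[ay * artW + ax] = shade(ax, ay); // the expensive path-traced lighting would go here
      shadeCalls++;
    }
  }

  const screenW = artW * scale;
  const screenH = artH * scale;
  const screen = new Float32Array(screenW * screenH);
  for (let sy = 0; sy < screenH; sy++) {
    for (let sx = 0; sx < screenW; sx++) {
      // Integer division maps each screen pixel back to its artistic pixel.
      screen[sy * screenW + sx] = art[Math.floor(sy / scale) * artW + Math.floor(sx / scale)];
    }
  }
  return { screen, shadeCalls };
}
```

For example, `renderPixelArt(2, 1, 2, (ax) => ax + 1)` makes only 2 shading calls but fills 8 screen pixels.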

More sample efficiency

The fundamental problem with low sample counts is that you don’t get very much information from each sample, so you need a lot of samples to get the information you need.

When light hits an object, it can normally bounce in any direction. This is why the color of a point on most objects is the same regardless of the angle from which it’s viewed.[1] Since it can bounce in any direction, the natural thing to do is to just try a lot of bounces to cover a lot of the directions it could bounce.

In 3D, light could bounce in any 3D direction. In 2D, light can only bounce in “2D” directions. There’s one fewer dimension to bounce in. That might not sound like a lot, but it should make a huge difference. I expect it to roughly square how much information each sample gives us about what color that pixel should be. Let’s round this off to a 4x improvement in performance. So now we’ve gone from a 20x improvement to an 80x improvement.
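Here is a rough illustration of the dimension argument. It is a back-of-the-envelope sketch, not a derivation of the 4x figure. Picking a random direction in 2D takes one random number, while 3D takes two. If you want sampled directions spaced about `spacing` radians apart, the circle needs a number that grows like 1/spacing, while the sphere needs one that grows like 1/spacing². All function names here are hypothetical.

```typescript
// Uniform random direction in 2D: one random number u in [0, 1) is enough.
function sampleDirection2D(u: number): [number, number] {
  const theta = 2 * Math.PI * u; // angle around the unit circle
  return [Math.cos(theta), Math.sin(theta)];
}

// Uniform random direction in 3D: it takes two random numbers.
function sampleDirection3D(u1: number, u2: number): [number, number, number] {
  const z = 1 - 2 * u1; // height on the unit sphere, uniform in [-1, 1]
  const r = Math.sqrt(Math.max(0, 1 - z * z)); // radius of the circle at height z
  const phi = 2 * Math.PI * u2; // angle around that circle
  return [r * Math.cos(phi), r * Math.sin(phi), z];
}

// Approximate number of directions needed so neighbors are about `spacing` radians apart.
// Circle: circumference / spacing. Sphere: surface area / spacing².
function directionsToCover(spacing: number): { in2D: number; in3D: number } {
  return {
    in2D: (2 * Math.PI) / spacing,
    in3D: (4 * Math.PI) / (spacing * spacing),
  };
}
```

At 0.1 radian spacing, that is about 63 directions on the circle versus about 1,257 on the sphere. The sphere’s count is roughly the square of the circle’s count, divided by π.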

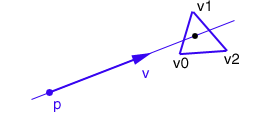

More efficient ray intersection tests

One of the things that makes shooting out a ray expensive in a 3D game is that you have to figure out which triangle it hits. This is called the “triangle-ray intersection”. And a game might have millions of triangles on the screen at once. In a 2D game, you only need to do ray-line (really ray-segment) intersection, because things are occluded by lines rather than triangles.
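Here is a sketch of the 2D test, assuming walls are stored as line segments. That is a hypothetical representation, and our game may store them differently. Each segment costs a couple of 2D cross products and a division. `raySegment` and `closestHit` are hypothetical names.

```typescript
type Vec2 = { x: number; y: number };

// 2D cross product (the z component of the 3D cross product).
function cross(u: Vec2, v: Vec2): number {
  return u.x * v.y - u.y * v.x;
}

// Distance t along the ray origin + t * dir to segment a-b, or null on a miss.
// Solves origin + t * dir = a + s * (b - a) for t >= 0 and 0 <= s <= 1.
function raySegment(origin: Vec2, dir: Vec2, a: Vec2, b: Vec2): number | null {
  const e = { x: b.x - a.x, y: b.y - a.y }; // segment direction
  const denom = cross(dir, e);
  if (Math.abs(denom) < 1e-12) return null; // parallel: treat as a miss
  const w = { x: a.x - origin.x, y: a.y - origin.y };
  const t = cross(w, e) / denom; // distance along the ray
  const s = cross(w, dir) / denom; // position along the segment (0 = a, 1 = b)
  return t >= 0 && s >= 0 && s <= 1 ? t : null;
}

// Nearest wall hit by the ray, checking every segment.
function closestHit(origin: Vec2, dir: Vec2, walls: [Vec2, Vec2][]): number | null {
  let best: number | null = null;
  for (const [a, b] of walls) {
    const t = raySegment(origin, dir, a, b);
    if (t !== null && (best === null || t < best)) best = t;
  }
  return best;
}
```

With few occluders, a simple loop like `closestHit` may be enough. With many, you would want an acceleration structure such as a grid or a bounding volume hierarchy, the same idea 3D renderers use for triangles.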

Not to mention, you probably have far fewer light-occluding surfaces in a 2D game than in a 3D game. I expect this to at least double sampling performance again, so make that a 160x improvement.

Better Resampled Importance Sampling

Remember ReSTIR, that thing that made the teapot actually look good at 1 sample per pixel? Resampled Importance Sampling is the trick upon which ReSTIR is based.

The idea is that you don’t just want to bounce light in a totally random direction. You want to bounce it in a way that is likely to give you useful information. How do you know which ways are useful? You can reuse information from previous frames and from nearby pixels. This adds a bit more work when deciding which way to bounce the light, but the improvement in how much information you get from each sample more than makes up for it. With more information per sample, you get less noisy images for the same number of samples.
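Here is a minimal sketch of Resampled Importance Sampling in 2D, using the kind of weighted reservoir ReSTIR is built on. It works in three steps. First, draw M cheap candidate directions uniformly. Second, give each candidate a weight based on a cheap guess of how useful it is (`pHat`). Third, keep one candidate with probability proportional to its weight, then correct for that choice so the estimate stays unbiased. The integrand `f`, the guess `pHat`, `Reservoir`, and `risEstimate` are all hypothetical. The part ReSTIR adds on top, which is merging reservoirs from nearby pixels and previous frames, is not shown.

```typescript
// A weighted reservoir holds one chosen candidate; each new candidate
// replaces it with probability weight / (sum of all weights so far).
class Reservoir {
  sample = 0;    // chosen candidate (a direction angle here)
  weightSum = 0; // running sum of candidate weights
  count = 0;     // number of candidates seen (M)

  update(candidate: number, weight: number, rand: () => number): void {
    this.weightSum += weight;
    this.count += 1;
    if (weight > 0 && rand() < weight / this.weightSum) this.sample = candidate;
  }
}

const TWO_PI = 2 * Math.PI;
// Hypothetical integrand: light arriving from direction theta.
const f = (theta: number): number => Math.max(0, Math.cos(theta)) * (1 + 0.5 * Math.sin(theta));
// Target function: a cheap approximation of f used to rank candidates.
const pHat = (theta: number): number => Math.max(0, Math.cos(theta));

// One RIS estimate of the integral of f over all 2D directions.
function risEstimate(M: number, rand: () => number = Math.random): number {
  const r = new Reservoir();
  const sourcePdf = 1 / TWO_PI; // candidates are drawn uniformly over the circle
  for (let i = 0; i < M; i++) {
    const x = TWO_PI * rand();
    r.update(x, pHat(x) / sourcePdf, rand); // resampling weight: pHat(x) / p(x)
  }
  if (r.weightSum === 0) return 0; // every candidate was useless
  const y = r.sample;
  const W = r.weightSum / (r.count * pHat(y)); // contribution weight for the chosen sample
  return f(y) * W;
}
```

The exact integral of this `f` is 2. Each call to `risEstimate(8)` traces only the one chosen direction, and averaged over many calls the result should come out near 2.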

This depends on nearby pixels and pixels from previous frames being similar enough to each other that they can share useful information. The more similar they are, the bigger the improvement. My guess is that in 2D games, this will be way more true than it is in 3D games. But I’m not so sure about this one, so let’s call it a 2x improvement again. Now we’re up to 2D being 320x more performant than 3D.
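Chaining this section’s four rough guesses together (each one an estimate, not a measurement):

```latex
\underbrace{20}_{\text{fewer pixels}}
\times \underbrace{4}_{\text{2D bounces}}
\times \underbrace{2}_{\text{cheaper ray tests}}
\times \underbrace{2}_{\text{better RIS reuse}}
= 320
```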

Stay tuned

I’m hoping that all of this will add up to make a relatively simple path-tracing implementation actually performant for our game.

I first need to rewrite our game’s renderer to use WebGPU instead of OpenGL. OpenGL is older and doesn’t support what we need to implement path tracing. Once I’ve done that, the fun begins. See you soon!

[1] An exception is objects that are specular, like mirrors, or retroreflective, like street signs. These objects tend to bounce light rays in a direction that depends on the direction of the incoming ray.
